diff --git a/dmc2gym/__init__.py b/dmc2gym/__init__.py

new file mode 100644

index 0000000..7c1d277

--- /dev/null

+++ b/dmc2gym/__init__.py

@@ -0,0 +1,52 @@

+import gym

+from gym.envs.registration import register

+

+

+def make(

+ domain_name,

+ task_name,

+ resource_files,

+ img_source,

+ total_frames,

+ seed=1,

+ visualize_reward=True,

+ from_pixels=False,

+ height=84,

+ width=84,

+ camera_id=0,

+ frame_skip=1,

+ episode_length=1000,

+ environment_kwargs=None

+):

+ env_id = 'dmc_%s_%s_%s-v1' % (domain_name, task_name, seed)

+

+ if from_pixels:

+ assert not visualize_reward, 'cannot use visualize reward when learning from pixels'

+

+ # convert episode length from simulator steps to agent steps (ceiling division by frame_skip)

+ max_episode_steps = (episode_length + frame_skip - 1) // frame_skip

+

+ if env_id not in gym.envs.registry.env_specs:

+ register(

+ id=env_id,

+ entry_point='dmc2gym.wrappers:DMCWrapper',

+ kwargs={

+ 'domain_name': domain_name,

+ 'task_name': task_name,

+ 'resource_files': resource_files,

+ 'img_source': img_source,

+ 'total_frames': total_frames,

+ 'task_kwargs': {

+ 'random': seed

+ },

+ 'environment_kwargs': environment_kwargs,

+ 'visualize_reward': visualize_reward,

+ 'from_pixels': from_pixels,

+ 'height': height,

+ 'width': width,

+ 'camera_id': camera_id,

+ 'frame_skip': frame_skip,

+ },

+ max_episode_steps=max_episode_steps

+ )

+ return gym.make(env_id)
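The environment id and the step limit in `make` come from two small formulas. The sketch below isolates them as plain functions (the names `dmc_env_id` and `max_episode_steps` are hypothetical helpers, not part of the package). `(a + b - 1) // b` is integer ceiling division, so an episode of `episode_length` simulator steps is covered by `ceil(episode_length / frame_skip)` agent steps.

```python
def dmc_env_id(domain_name, task_name, seed):
    """Mirror the id format used by `make`: 'dmc_<domain>_<task>_<seed>-v1'."""
    return 'dmc_%s_%s_%s-v1' % (domain_name, task_name, seed)


def max_episode_steps(episode_length, frame_skip):
    """Agent steps needed to cover `episode_length` simulator steps.

    (a + b - 1) // b equals ceil(a / b) for positive integers a and b.
    """
    return (episode_length + frame_skip - 1) // frame_skip


print(dmc_env_id('cartpole', 'swingup', 1))  # dmc_cartpole_swingup_1-v1
print(max_episode_steps(1000, 8))  # 125
print(max_episode_steps(1000, 3))  # 334 (the last agent step is partial)
```

Because the seed is part of the id, calling `make` twice with different seeds registers two distinct gym environments rather than reusing one.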

diff --git a/dmc2gym/natural_imgsource.py b/dmc2gym/natural_imgsource.py

new file mode 100644

index 0000000..42ef62f

--- /dev/null

+++ b/dmc2gym/natural_imgsource.py

@@ -0,0 +1,183 @@

+

+# Copyright (c) Facebook, Inc. and its affiliates.

+# All rights reserved.

+#

+# This source code is licensed under the license found in the

+# LICENSE file in the root directory of this source tree.

+

+import numpy as np

+import cv2

+import skvideo.io

+import random

+import tqdm

+

+class BackgroundMatting(object):

+ """

+ Produce a mask by masking the given color. This is a simple strategy

+ but effective for many games.

+ """

+ def __init__(self, color):

+ """

+ Args:

+ color: a (r, g, b) tuple or single value for grayscale

+ """

+ self._color = color

+

+ def get_mask(self, img):

+ return img == self._color

+

+

+class ImageSource(object):

+ """

+ Source of natural images to be added to a simulated environment.

+ """

+ def get_image(self):

+ """

+ Returns:

+ an RGB image of [h, w, 3] with a fixed shape.

+ """

+ pass

+

+ def reset(self):

+ """ Called when an episode ends. """

+ pass

+

+

+class FixedColorSource(ImageSource):

+ def __init__(self, shape, color):

+ """

+ Args:

+ shape: [h, w]

+ color: a 3-tuple

+ """

+ self.arr = np.zeros((shape[0], shape[1], 3))

+ self.arr[:, :] = color

+

+ def get_image(self):

+ return self.arr

+

+

+class RandomColorSource(ImageSource):

+ def __init__(self, shape):

+ """

+ Args:

+ shape: [h, w]

+ """

+ self.shape = shape

+ self.arr = None

+ self.reset()

+

+ def reset(self):

+ self._color = np.random.randint(0, 256, size=(3,))

+ self.arr = np.zeros((self.shape[0], self.shape[1], 3))

+ self.arr[:, :] = self._color

+

+ def get_image(self):

+ return self.arr

+

+

+class NoiseSource(ImageSource):

+ def __init__(self, shape, strength=255):

+ """

+ Args:

+ shape: [h, w]

+ strength (int): standard deviation of the Gaussian pixel noise, nominally in [0, 255]

+ """

+ self.shape = shape

+ self.strength = strength

+

+ def get_image(self):

+ return np.random.randn(self.shape[0], self.shape[1], 3) * self.strength

+

+

+class RandomImageSource(ImageSource):

+ def __init__(self, shape, filelist, total_frames=None, grayscale=False):

+ """

+ Args:

+ shape: [h, w]

+ filelist: a list of image files

+ """

+ self.grayscale = grayscale

+ self.total_frames = total_frames

+ self.shape = shape

+ self.filelist = filelist

+ self.build_arr()

+ self.current_idx = 0

+ self.reset()

+

+ def build_arr(self):

+ self.total_frames = self.total_frames if self.total_frames else len(self.filelist)

+ self.arr = np.zeros((self.total_frames, self.shape[0], self.shape[1]) + ((3,) if not self.grayscale else (1,)))

+ for i in range(self.total_frames):

+ # if i % len(self.filelist) == 0: random.shuffle(self.filelist)

+ fname = self.filelist[i % len(self.filelist)]

+ if self.grayscale: im = cv2.imread(fname, cv2.IMREAD_GRAYSCALE)[..., None]

+ else: im = cv2.imread(fname, cv2.IMREAD_COLOR)

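+ self.arr[i] = cv2.resize(im, (self.shape[1], self.shape[0])).reshape(self.arr[i].shape) ## THIS IS NOT A BUG! cv2 uses (width, height); reshape restores the channel axis cv2.resize drops for 1-channel images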

+

+ def reset(self):

+ self._loc = np.random.randint(0, self.total_frames)

+

+ def get_image(self):

+ return self.arr[self._loc]

+

+

+class RandomVideoSource(ImageSource):

+ def __init__(self, shape, filelist, total_frames=None, grayscale=False):

+ """

+ Args:

+ shape: [h, w]

+ filelist: a list of video files

+ """

+ self.grayscale = grayscale

+ self.total_frames = total_frames

+ self.shape = shape

+ self.filelist = filelist

+ self.build_arr()

+ self.current_idx = 0

+ self.reset()

+

+ def build_arr(self):

+ if not self.total_frames:

+ self.total_frames = 0

+ self.arr = None

+ random.shuffle(self.filelist)

+ for fname in tqdm.tqdm(self.filelist, desc="Loading videos for natural", position=0):

+ if self.grayscale: frames = skvideo.io.vread(fname, outputdict={"-pix_fmt": "gray"})

+ else: frames = skvideo.io.vread(fname)

+ local_arr = np.zeros((frames.shape[0], self.shape[0], self.shape[1]) + ((3,) if not self.grayscale else (1,)))

+ for i in tqdm.tqdm(range(frames.shape[0]), desc="video frames", position=1):

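+ local_arr[i] = cv2.resize(frames[i], (self.shape[1], self.shape[0])).reshape(local_arr[i].shape) ## THIS IS NOT A BUG! cv2 uses (width, height); reshape restores the channel axis cv2.resize drops for 1-channel frames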

+ if self.arr is None:

+ self.arr = local_arr

+ else:

+ self.arr = np.concatenate([self.arr, local_arr], 0)

+ self.total_frames += local_arr.shape[0]

+ else:

+ self.arr = np.zeros((self.total_frames, self.shape[0], self.shape[1]) + ((3,) if not self.grayscale else (1,)))

+ total_frame_i = 0

+ file_i = 0

+ with tqdm.tqdm(total=self.total_frames, desc="Loading videos for natural") as pbar:

+ while total_frame_i < self.total_frames:

+ if file_i % len(self.filelist) == 0: random.shuffle(self.filelist)

+ file_i += 1

+ fname = self.filelist[file_i % len(self.filelist)]

+ if self.grayscale: frames = skvideo.io.vread(fname, outputdict={"-pix_fmt": "gray"})

+ else: frames = skvideo.io.vread(fname)

+ for frame_i in range(frames.shape[0]):

+ if total_frame_i >= self.total_frames: break

+ if self.grayscale:

+ self.arr[total_frame_i] = cv2.resize(frames[frame_i], (self.shape[1], self.shape[0]))[..., None] ## THIS IS NOT A BUG! cv2 uses (width, height)

+ else:

+ self.arr[total_frame_i] = cv2.resize(frames[frame_i], (self.shape[1], self.shape[0]))

+ pbar.update(1)

+ total_frame_i += 1

+

+

+ def reset(self):

+ self._loc = np.random.randint(0, self.total_frames)

+

+ def get_image(self):

+ img = self.arr[self._loc % self.total_frames]

+ self._loc += 1

+ return img
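`RandomVideoSource` preloads every frame into one array, starts at a random frame on `reset`, and advances one frame per `get_image` call, wrapping with a modulo. The class below is a hypothetical, NumPy-only stand-in for that playback logic (no video decoding or resizing), using `numpy.random.Generator` instead of the global `np.random` state purely so the demo is reproducible.

```python
import numpy as np


class LoopingFrames:
    """Hypothetical minimal stand-in for RandomVideoSource playback.

    `frames` is a preloaded array of shape [T, h, w, c]. `reset` picks a random
    start frame; each `get_image` returns the current frame and advances,
    wrapping around so playback never runs past the last frame.
    """

    def __init__(self, frames, rng=None):
        self.frames = frames
        self.total_frames = frames.shape[0]
        self.rng = rng if rng is not None else np.random.default_rng()
        self.reset()

    def reset(self):
        self._loc = int(self.rng.integers(0, self.total_frames))

    def get_image(self):
        img = self.frames[self._loc % self.total_frames]
        self._loc += 1
        return img


# Four 2x2 single-channel frames whose pixel values equal the frame index.
frames = np.arange(4).reshape(4, 1, 1, 1) * np.ones((4, 2, 2, 1))
src = LoopingFrames(frames, rng=np.random.default_rng(0))
src._loc = 2  # force a known start frame for the demo
print([int(src.get_image()[0, 0, 0]) for _ in range(6)])  # [2, 3, 0, 1, 2, 3]
```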

diff --git a/dmc2gym/wrappers.py b/dmc2gym/wrappers.py

new file mode 100644

index 0000000..077f2eb

--- /dev/null

+++ b/dmc2gym/wrappers.py

@@ -0,0 +1,198 @@

+from gym import core, spaces

+import glob

+import os

+import local_dm_control_suite as suite

+from dm_env import specs

+import numpy as np

+import skimage.io

+

+from dmc2gym import natural_imgsource

+

+

+def _spec_to_box(spec):

+ def extract_min_max(s):

+ assert s.dtype == np.float64 or s.dtype == np.float32

+ dim = int(np.prod(s.shape))

+ if type(s) == specs.Array:

+ bound = np.inf * np.ones(dim, dtype=np.float32)

+ return -bound, bound

+ elif type(s) == specs.BoundedArray:

+ zeros = np.zeros(dim, dtype=np.float32)

+ return s.minimum + zeros, s.maximum + zeros

+

+ mins, maxs = [], []

+ for s in spec:

+ mn, mx = extract_min_max(s)

+ mins.append(mn)

+ maxs.append(mx)

+ low = np.concatenate(mins, axis=0)

+ high = np.concatenate(maxs, axis=0)

+ assert low.shape == high.shape

+ return spaces.Box(low, high, dtype=np.float32)

+

+

+def _flatten_obs(obs):

+ obs_pieces = []

+ for v in obs.values():

+ flat = np.array([v]) if np.isscalar(v) else v.ravel()

+ obs_pieces.append(flat)

+ return np.concatenate(obs_pieces, axis=0)

+

+

+class DMCWrapper(core.Env):

+ def __init__(

+ self,

+ domain_name,

+ task_name,

+ resource_files,

+ img_source,

+ total_frames,

+ task_kwargs=None,

+ visualize_reward=False,

+ from_pixels=False,

+ height=84,

+ width=84,

+ camera_id=0,

+ frame_skip=1,

+ environment_kwargs=None

+ ):

+ assert task_kwargs is not None and 'random' in task_kwargs, 'please specify a seed, for deterministic behaviour'

+ self._from_pixels = from_pixels

+ self._height = height

+ self._width = width

+ self._camera_id = camera_id

+ self._frame_skip = frame_skip

+ self._img_source = img_source

+

+ # create task

+ self._env = suite.load(

+ domain_name=domain_name,

+ task_name=task_name,

+ task_kwargs=task_kwargs,

+ visualize_reward=visualize_reward,

+ environment_kwargs=environment_kwargs

+ )

+

+ # true and normalized action spaces

+ self._true_action_space = _spec_to_box([self._env.action_spec()])

+ self._norm_action_space = spaces.Box(

+ low=-1.0,

+ high=1.0,

+ shape=self._true_action_space.shape,

+ dtype=np.float32

+ )

+

+ # create observation space

+ if from_pixels:

+ self._observation_space = spaces.Box(

+ low=0, high=255, shape=[3, height, width], dtype=np.uint8

+ )

+ else:

+ self._observation_space = _spec_to_box(

+ self._env.observation_spec().values()

+ )

+

+ self._internal_state_space = spaces.Box(

+ low=-np.inf,

+ high=np.inf,

+ shape=self._env.physics.get_state().shape,

+ dtype=np.float32

+ )

+

+ # background

+ if img_source is not None:

+ shape2d = (height, width)

+ if img_source == "color":

+ self._bg_source = natural_imgsource.RandomColorSource(shape2d)

+ elif img_source == "noise":

+ self._bg_source = natural_imgsource.NoiseSource(shape2d)

+ else:

+ files = glob.glob(os.path.expanduser(resource_files))

+ assert len(files), "Pattern {} does not match any files".format(

+ resource_files

+ )

+ if img_source == "images":

+ self._bg_source = natural_imgsource.RandomImageSource(shape2d, files, grayscale=True, total_frames=total_frames)

+ elif img_source == "video":

+ self._bg_source = natural_imgsource.RandomVideoSource(shape2d, files, grayscale=True, total_frames=total_frames)

+ else:

+ raise Exception("img_source %s not defined." % img_source)

+

+ # set seed

+ self.seed(seed=task_kwargs.get('random', 1))

+

+ def __getattr__(self, name):

+ return getattr(self._env, name)

+

+ def _get_obs(self, time_step):

+ if self._from_pixels:

+ obs = self.render(

+ height=self._height,

+ width=self._width,

+ camera_id=self._camera_id

+ )

+ if self._img_source is not None:

+ mask = np.logical_and((obs[:, :, 2] > obs[:, :, 1]), (obs[:, :, 2] > obs[:, :, 0])) # hardcoded for dmc

+ bg = self._bg_source.get_image()

+ obs[mask] = bg[mask]

+ obs = obs.transpose(2, 0, 1).copy()

+ else:

+ obs = _flatten_obs(time_step.observation)

+ return obs

+

+ def _convert_action(self, action):

+ action = action.astype(np.float64)

+ true_delta = self._true_action_space.high - self._true_action_space.low

+ norm_delta = self._norm_action_space.high - self._norm_action_space.low

+ action = (action - self._norm_action_space.low) / norm_delta

+ action = action * true_delta + self._true_action_space.low

+ action = action.astype(np.float32)

+ return action

+

+ @property

+ def observation_space(self):

+ return self._observation_space

+

+ @property

+ def internal_state_space(self):

+ return self._internal_state_space

+

+ @property

+ def action_space(self):

+ return self._norm_action_space

+

+ def seed(self, seed):

+ self._true_action_space.seed(seed)

+ self._norm_action_space.seed(seed)

+ self._observation_space.seed(seed)

+

+ def step(self, action):

+ assert self._norm_action_space.contains(action)

+ action = self._convert_action(action)

+ assert self._true_action_space.contains(action)

+ reward = 0

+ extra = {'internal_state': self._env.physics.get_state().copy()}

+

+ for _ in range(self._frame_skip):

+ time_step = self._env.step(action)

+ reward += time_step.reward or 0

+ done = time_step.last()

+ if done:

+ break

+ obs = self._get_obs(time_step)

+ extra['discount'] = time_step.discount

+ return obs, reward, done, extra

+

+ def reset(self):

+ time_step = self._env.reset()

+ obs = self._get_obs(time_step)

+ return obs

+

+ def render(self, mode='rgb_array', height=None, width=None, camera_id=None):

+ assert mode == 'rgb_array', 'only support rgb_array mode, given %s' % mode

+ height = height or self._height

+ width = width or self._width

+ camera_id = self._camera_id if camera_id is None else camera_id

+ return self._env.physics.render(

+ height=height, width=width, camera_id=camera_id

+ )
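Two pieces of `DMCWrapper` carry most of its logic: the background replacement in `_get_obs` and the action rescaling in `_convert_action`. The functions below are a hedged, standalone sketch of both (the names `composite_background` and `convert_action` are hypothetical). The mask mirrors the wrapper's hard-coded DMC heuristic, where a pixel is treated as background when its blue channel exceeds both red and green, and the rescaling is the affine map from `[-1, 1]` to the true actuator bounds.

```python
import numpy as np


def composite_background(obs, bg):
    """Replace blue-dominant pixels of an HxWx3 uint8 render with `bg`.

    `bg` may be HxWx3 or HxWx1 (grayscale): bg[mask] then has shape [N, 1],
    which broadcasts into obs[mask] of shape [N, 3].
    """
    obs = obs.copy()  # leave the caller's array untouched
    mask = np.logical_and(obs[:, :, 2] > obs[:, :, 1], obs[:, :, 2] > obs[:, :, 0])
    obs[mask] = bg[mask]
    return obs


def convert_action(action, low, high):
    """Affinely map an action from [-1, 1] to the true bounds [low, high]."""
    action = np.asarray(action, dtype=np.float64)
    unit = (action + 1.0) / 2.0  # [-1, 1] -> [0, 1]
    return (unit * (high - low) + low).astype(np.float32)


obs = np.zeros((2, 2, 3), dtype=np.uint8)
obs[0, 0] = (10, 20, 200)  # blue-dominant: replaced by the background
obs[1, 1] = (200, 20, 10)  # red-dominant: kept
bg = np.full((2, 2, 1), 7, dtype=np.uint8)  # grayscale background
out = composite_background(obs, bg)
print(out[0, 0].tolist(), out[1, 1].tolist())  # [7, 7, 7] [200, 20, 10]

low, high = np.array([0.0, -2.0, 0.0]), np.array([1.0, 2.0, 4.0])
print(convert_action([-1.0, 0.0, 1.0], low, high).tolist())  # [0.0, 0.0, 4.0]
```

The blue-dominance test is a heuristic tuned to the default DMC skybox and floor; any agent geometry that also renders blue-dominant would be overwritten too.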

diff --git a/local_dm_control_suite/README.md b/local_dm_control_suite/README.md

new file mode 100755

index 0000000..135ab42

--- /dev/null

+++ b/local_dm_control_suite/README.md

@@ -0,0 +1,56 @@

+# DeepMind Control Suite.

+

+This submodule contains the domains and tasks described in the

+[DeepMind Control Suite tech report](https://arxiv.org/abs/1801.00690).

+

+## Quickstart

+

+```python

+from dm_control import suite

+import numpy as np

+

+# Load one task:

+env = suite.load(domain_name="cartpole", task_name="swingup")

+

+# Iterate over a task set:

+for domain_name, task_name in suite.BENCHMARKING:

+ env = suite.load(domain_name, task_name)

+

+# Step through an episode and print out reward, discount and observation.

+action_spec = env.action_spec()

+time_step = env.reset()

+while not time_step.last():

+ action = np.random.uniform(action_spec.minimum,

+ action_spec.maximum,

+ size=action_spec.shape)

+ time_step = env.step(action)

+ print(time_step.reward, time_step.discount, time_step.observation)

+```

+

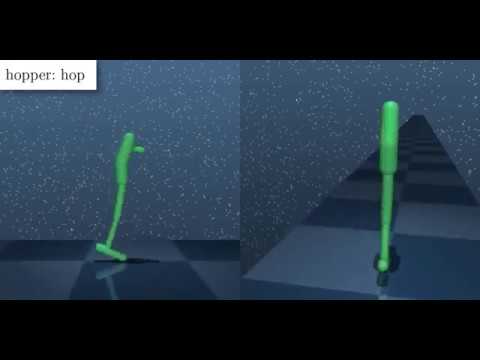

+## Illustration video

+

+Below is a video montage of solved Control Suite tasks, with reward

+visualisation enabled.

+

+[Video montage of solved Control Suite tasks](https://www.youtube.com/watch?v=rAai4QzcYbs)

+

+

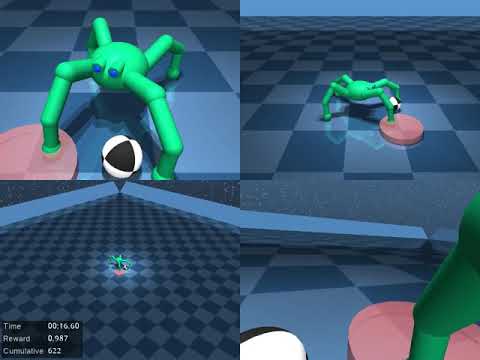

+### Quadruped domain [April 2019]

+

+Roughly based on the 'ant' model introduced by [Schulman et al. 2015](https://arxiv.org/abs/1506.02438). Main modifications to the body are:

+

+- 4 DoFs per leg, 1 constraining tendon.

+- 3 actuators per leg: 'yaw', 'lift', 'extend'.

+- Filtered position actuators with timescale of 100ms.

+- Sensors include an IMU, force/torque sensors, and rangefinders.

+

+Four tasks:

+

+- `walk` and `run`: self-right the body then move forward at a desired speed.

+- `escape`: escape a bowl-shaped random terrain (uses rangefinders).

+- `fetch`: go to a moving ball and bring it to a target.

+

+All behaviors in the video below were trained with [Abdolmaleki et al.'s

+MPO](https://arxiv.org/abs/1806.06920).

+

+[Quadruped tasks video](https://www.youtube.com/watch?v=RhRLjbb7pBE)

diff --git a/local_dm_control_suite/__init__.py b/local_dm_control_suite/__init__.py

new file mode 100755

index 0000000..c4d7cb9

--- /dev/null

+++ b/local_dm_control_suite/__init__.py

@@ -0,0 +1,151 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""A collection of MuJoCo-based Reinforcement Learning environments."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import collections

+import inspect

+import itertools

+

+from dm_control.rl import control

+

+from local_dm_control_suite import acrobot

+from local_dm_control_suite import ball_in_cup

+from local_dm_control_suite import cartpole

+from local_dm_control_suite import cheetah

+from local_dm_control_suite import finger

+from local_dm_control_suite import fish

+from local_dm_control_suite import hopper

+from local_dm_control_suite import humanoid

+from local_dm_control_suite import humanoid_CMU

+from local_dm_control_suite import lqr

+from local_dm_control_suite import manipulator

+from local_dm_control_suite import pendulum

+from local_dm_control_suite import point_mass

+from local_dm_control_suite import quadruped

+from local_dm_control_suite import reacher

+from local_dm_control_suite import stacker

+from local_dm_control_suite import swimmer

+from local_dm_control_suite import walker

+

+# Find all domains imported.

+_DOMAINS = {name: module for name, module in locals().items()

+ if inspect.ismodule(module) and hasattr(module, 'SUITE')}

+

+

+def _get_tasks(tag):

+ """Returns a sequence of (domain name, task name) pairs for the given tag."""

+ result = []

+

+ for domain_name in sorted(_DOMAINS.keys()):

+ domain = _DOMAINS[domain_name]

+

+ if tag is None:

+ tasks_in_domain = domain.SUITE

+ else:

+ tasks_in_domain = domain.SUITE.tagged(tag)

+

+ for task_name in tasks_in_domain.keys():

+ result.append((domain_name, task_name))

+

+ return tuple(result)

+

+

+def _get_tasks_by_domain(tasks):

+ """Returns a dict mapping from domain name to a tuple of task names."""

+ result = collections.defaultdict(list)

+

+ for domain_name, task_name in tasks:

+ result[domain_name].append(task_name)

+

+ return {k: tuple(v) for k, v in result.items()}

+

+

+# A sequence containing all (domain name, task name) pairs.

+ALL_TASKS = _get_tasks(tag=None)

+

+# Subsets of ALL_TASKS, generated via the tag mechanism.

+BENCHMARKING = _get_tasks('benchmarking')

+EASY = _get_tasks('easy')

+HARD = _get_tasks('hard')

+EXTRA = tuple(sorted(set(ALL_TASKS) - set(BENCHMARKING)))

+

+# A mapping from each domain name to a sequence of its task names.

+TASKS_BY_DOMAIN = _get_tasks_by_domain(ALL_TASKS)

+

+

+def load(domain_name, task_name, task_kwargs=None, environment_kwargs=None,

+ visualize_reward=False):

+ """Returns an environment from a domain name, task name and optional settings.

+

+ ```python

+ env = suite.load('cartpole', 'balance')

+ ```

+

+ Args:

+ domain_name: A string containing the name of a domain.

+ task_name: A string containing the name of a task.

+ task_kwargs: Optional `dict` of keyword arguments for the task.

+ environment_kwargs: Optional `dict` specifying keyword arguments for the

+ environment.

+ visualize_reward: Optional `bool`. If `True`, object colours in rendered

+ frames are set to indicate the reward at each step. Default `False`.

+

+ Returns:

+ The requested environment.

+ """

+ return build_environment(domain_name, task_name, task_kwargs,

+ environment_kwargs, visualize_reward)

+

+

+def build_environment(domain_name, task_name, task_kwargs=None,

+ environment_kwargs=None, visualize_reward=False):

+ """Returns an environment from the suite given a domain name and a task name.

+

+ Args:

+ domain_name: A string containing the name of a domain.

+ task_name: A string containing the name of a task.

+ task_kwargs: Optional `dict` specifying keyword arguments for the task.

+ environment_kwargs: Optional `dict` specifying keyword arguments for the

+ environment.

+ visualize_reward: Optional `bool`. If `True`, object colours in rendered

+ frames are set to indicate the reward at each step. Default `False`.

+

+ Raises:

+ ValueError: If the domain or task doesn't exist.

+

+ Returns:

+ An instance of the requested environment.

+ """

+ if domain_name not in _DOMAINS:

+ raise ValueError('Domain {!r} does not exist.'.format(domain_name))

+

+ domain = _DOMAINS[domain_name]

+

+ if task_name not in domain.SUITE:

+ raise ValueError('Level {!r} does not exist in domain {!r}.'.format(

+ task_name, domain_name))

+

+ task_kwargs = task_kwargs or {}

+ if environment_kwargs is not None:

+ task_kwargs = task_kwargs.copy()

+ task_kwargs['environment_kwargs'] = environment_kwargs

+ env = domain.SUITE[task_name](**task_kwargs)

+ env.task.visualize_reward = visualize_reward

+ return env
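The suite's task sets (`ALL_TASKS`, `BENCHMARKING`, `EXTRA`, `TASKS_BY_DOMAIN`) are derived by walking each domain's `SUITE` and filtering by tag. The sketch below reproduces that logic over a plain dict instead of `containers.TaggedTasks`; the `REGISTRY` contents and their tags are hypothetical and chosen only for illustration.

```python
import collections

# Hypothetical registry: domain name -> {task name: set of tags}.
REGISTRY = {
    'cartpole': {'swingup': {'benchmarking'}, 'two_poles': set()},
    'acrobot': {'swingup': {'benchmarking'}, 'swingup_sparse': {'benchmarking'}},
    'ball_in_cup': {'catch': {'benchmarking', 'easy'}},
}


def get_tasks(tag=None):
    """(domain, task) pairs with domains sorted, optionally filtered by tag."""
    result = []
    for domain_name in sorted(REGISTRY):
        for task_name, tags in REGISTRY[domain_name].items():
            if tag is None or tag in tags:
                result.append((domain_name, task_name))
    return tuple(result)


def get_tasks_by_domain(tasks):
    """Map each domain name to a tuple of its task names."""
    result = collections.defaultdict(list)
    for domain_name, task_name in tasks:
        result[domain_name].append(task_name)
    return {k: tuple(v) for k, v in result.items()}


ALL = get_tasks()
BENCH = get_tasks('benchmarking')
EXTRA = tuple(sorted(set(ALL) - set(BENCH)))  # everything not benchmarked
print(get_tasks('easy'))  # (('ball_in_cup', 'catch'),)
print(EXTRA)  # (('cartpole', 'two_poles'),)
print(get_tasks_by_domain(ALL)['acrobot'])  # ('swingup', 'swingup_sparse')
```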

diff --git a/local_dm_control_suite/acrobot.py b/local_dm_control_suite/acrobot.py

new file mode 100755

index 0000000..a12b892

--- /dev/null

+++ b/local_dm_control_suite/acrobot.py

@@ -0,0 +1,127 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Acrobot domain."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import collections

+

+from dm_control import mujoco

+from dm_control.rl import control

+from local_dm_control_suite import base

+from local_dm_control_suite import common

+from dm_control.utils import containers

+from dm_control.utils import rewards

+import numpy as np

+

+_DEFAULT_TIME_LIMIT = 10

+SUITE = containers.TaggedTasks()

+

+

+def get_model_and_assets():

+ """Returns a tuple containing the model XML string and a dict of assets."""

+ return common.read_model('acrobot.xml'), common.ASSETS

+

+

+@SUITE.add('benchmarking')

+def swingup(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the Acrobot swing-up-and-balance task with a smooth reward."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Balance(sparse=False, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+@SUITE.add('benchmarking')

+def swingup_sparse(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the Acrobot swing-up-and-balance task with a sparse reward."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Balance(sparse=True, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+class Physics(mujoco.Physics):

+ """Physics simulation with additional features for the Acrobot domain."""

+

+ def horizontal(self):

+ """Returns horizontal (x) component of body frame z-axes."""

+ return self.named.data.xmat[['upper_arm', 'lower_arm'], 'xz']

+

+ def vertical(self):

+ """Returns vertical (z) component of body frame z-axes."""

+ return self.named.data.xmat[['upper_arm', 'lower_arm'], 'zz']

+

+ def to_target(self):

+ """Returns the distance from the tip to the target."""

+ tip_to_target = (self.named.data.site_xpos['target'] -

+ self.named.data.site_xpos['tip'])

+ return np.linalg.norm(tip_to_target)

+

+ def orientations(self):

+ """Returns the sines and cosines of the pole angles."""

+ return np.concatenate((self.horizontal(), self.vertical()))

+

+

+class Balance(base.Task):

+ """An Acrobot `Task` to swing up and balance the pole."""

+

+ def __init__(self, sparse, random=None):

+ """Initializes an instance of `Balance`.

+

+ Args:

+ sparse: A `bool` specifying whether to use a sparse (indicator) reward.

+ random: Optional, either a `numpy.random.RandomState` instance, an

+ integer seed for creating a new `RandomState`, or None to select a seed

+ automatically (default).

+ """

+ self._sparse = sparse

+ super(Balance, self).__init__(random=random)

+

+ def initialize_episode(self, physics):

+ """Sets the state of the environment at the start of each episode.

+

+ Shoulder and elbow are set to a random position between [-pi, pi).

+

+ Args:

+ physics: An instance of `Physics`.

+ """

+ physics.named.data.qpos[

+ ['shoulder', 'elbow']] = self.random.uniform(-np.pi, np.pi, 2)

+ super(Balance, self).initialize_episode(physics)

+

+ def get_observation(self, physics):

+ """Returns an observation of pole orientation and angular velocities."""

+ obs = collections.OrderedDict()

+ obs['orientations'] = physics.orientations()

+ obs['velocity'] = physics.velocity()

+ return obs

+

+ def _get_reward(self, physics, sparse):

+ target_radius = physics.named.model.site_size['target', 0]

+ return rewards.tolerance(physics.to_target(),

+ bounds=(0, target_radius),

+ margin=0 if sparse else 1)

+

+ def get_reward(self, physics):

+ """Returns a sparse or a smooth reward, as specified in the constructor."""

+ return self._get_reward(physics, sparse=self._sparse)
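`Balance._get_reward` switches between sparse and smooth rewards only through `margin`: with `margin=0`, `rewards.tolerance` acts as an indicator of the tip being within `target_radius`; with `margin=1` it decays smoothly outside the bounds. The function below is a simplified re-implementation for illustration, assuming the default Gaussian-shaped falloff and `value_at_margin=0.1`; the real `dm_control.utils.rewards.tolerance` supports other sigmoid shapes and validates its arguments.

```python
import numpy as np


def tolerance_sketch(x, bounds=(0.0, 0.0), margin=0.0, value_at_margin=0.1):
    """Simplified bounds-with-margin reward (Gaussian falloff assumed).

    Returns 1 inside `bounds`. With margin == 0 it is an indicator; otherwise
    it decays smoothly and equals `value_at_margin` at distance `margin`.
    """
    lower, upper = bounds
    x = np.asarray(x, dtype=float)
    in_bounds = np.logical_and(lower <= x, x <= upper)
    if margin == 0:
        return np.where(in_bounds, 1.0, 0.0)
    # Distance outside the bounds, in units of the margin.
    d = np.where(x < lower, lower - x, x - upper) / margin
    scale = np.sqrt(-2.0 * np.log(value_at_margin))
    return np.where(in_bounds, 1.0, np.exp(-0.5 * (d * scale) ** 2))


distances = [0.1, 0.5, 1.2]  # tip-to-target distances
target_radius = 0.2
print(tolerance_sketch(distances, (0, target_radius), margin=0).tolist())  # [1.0, 0.0, 0.0]
print(tolerance_sketch(distances, (0, target_radius), margin=1))
```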

diff --git a/local_dm_control_suite/acrobot.xml b/local_dm_control_suite/acrobot.xml

new file mode 100755

index 0000000..79b76d9

--- /dev/null

+++ b/local_dm_control_suite/acrobot.xml

@@ -0,0 +1,43 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/local_dm_control_suite/ball_in_cup.py b/local_dm_control_suite/ball_in_cup.py

new file mode 100755

index 0000000..ac3e47f

--- /dev/null

+++ b/local_dm_control_suite/ball_in_cup.py

@@ -0,0 +1,100 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Ball-in-Cup Domain."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import collections

+

+from dm_control import mujoco

+from dm_control.rl import control

+from local_dm_control_suite import base

+from local_dm_control_suite import common

+from dm_control.utils import containers

+

+_DEFAULT_TIME_LIMIT = 20 # (seconds)

+_CONTROL_TIMESTEP = .02 # (seconds)

+

+

+SUITE = containers.TaggedTasks()

+

+

+def get_model_and_assets():

+ """Returns a tuple containing the model XML string and a dict of assets."""

+ return common.read_model('ball_in_cup.xml'), common.ASSETS

+

+

+@SUITE.add('benchmarking', 'easy')

+def catch(time_limit=_DEFAULT_TIME_LIMIT, random=None, environment_kwargs=None):

+ """Returns the Ball-in-Cup task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = BallInCup(random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, control_timestep=_CONTROL_TIMESTEP,

+ **environment_kwargs)

+

+

+class Physics(mujoco.Physics):

+ """Physics with additional features for the Ball-in-Cup domain."""

+

+ def ball_to_target(self):

+ """Returns the vector from the ball to the target."""

+ target = self.named.data.site_xpos['target', ['x', 'z']]

+ ball = self.named.data.xpos['ball', ['x', 'z']]

+ return target - ball

+

+ def in_target(self):

+ """Returns 1 if the ball is in the target, 0 otherwise."""

+ ball_to_target = abs(self.ball_to_target())

+ target_size = self.named.model.site_size['target', [0, 2]]

+ ball_size = self.named.model.geom_size['ball', 0]

+ return float(all(ball_to_target < target_size - ball_size))

+

+

+class BallInCup(base.Task):

+ """The Ball-in-Cup task. Put the ball in the cup."""

+

+ def initialize_episode(self, physics):

+ """Sets the state of the environment at the start of each episode.

+

+ Args:

+ physics: An instance of `Physics`.

+

+ """

+ # Find a collision-free random initial position of the ball.

+ penetrating = True

+ while penetrating:

+ # Assign a random ball position.

+ physics.named.data.qpos['ball_x'] = self.random.uniform(-.2, .2)

+ physics.named.data.qpos['ball_z'] = self.random.uniform(.2, .5)

+ # Check for collisions.

+ physics.after_reset()

+ penetrating = physics.data.ncon > 0

+ super(BallInCup, self).initialize_episode(physics)

+

+ def get_observation(self, physics):

+ """Returns an observation of the state."""

+ obs = collections.OrderedDict()

+ obs['position'] = physics.position()

+ obs['velocity'] = physics.velocity()

+ return obs

+

+ def get_reward(self, physics):

+ """Returns a sparse reward."""

+ return physics.in_target()
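`Physics.in_target` declares success only when the whole ball, not just its centre, lies inside the target region in the x-z plane: on each axis the centre offset must be smaller than the target's size minus the ball radius. The standalone sketch below assumes the target site is a box whose `site_size` entries are half-extents (MuJoCo's convention for box sizes); the function name and inputs are hypothetical.

```python
import numpy as np


def in_target(ball_xz, target_xz, target_half_sizes, ball_radius):
    """1.0 if a ball of `ball_radius` lies fully inside the target box, else 0.0."""
    offset = np.abs(np.asarray(target_xz) - np.asarray(ball_xz))
    return float(np.all(offset < np.asarray(target_half_sizes) - ball_radius))


print(in_target([0.0, 0.3], [0.0, 0.3], [0.05, 0.05], 0.025))   # 1.0
print(in_target([0.04, 0.3], [0.0, 0.3], [0.05, 0.05], 0.025))  # 0.0 (ball pokes out in x)
```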

diff --git a/local_dm_control_suite/ball_in_cup.xml b/local_dm_control_suite/ball_in_cup.xml

new file mode 100755

index 0000000..792073f

--- /dev/null

+++ b/local_dm_control_suite/ball_in_cup.xml

@@ -0,0 +1,54 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/local_dm_control_suite/base.py b/local_dm_control_suite/base.py

new file mode 100755

index 0000000..fd78318

--- /dev/null

+++ b/local_dm_control_suite/base.py

@@ -0,0 +1,112 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Base class for tasks in the Control Suite."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+from dm_control import mujoco

+from dm_control.rl import control

+

+import numpy as np

+

+

+class Task(control.Task):

+ """Base class for tasks in the Control Suite.

+

+ Actions are mapped directly to the states of MuJoCo actuators: each element of

+ the action array is used to set the control input for a single actuator. The

+ ordering of the actuators is the same as in the corresponding MJCF XML file.

+

+ Attributes:

+ random: A `numpy.random.RandomState` instance. This should be used to

+ generate all random variables associated with the task, such as random

+ starting states, observation noise* etc.

+

+ *If sensor noise is enabled in the MuJoCo model then this will be generated

+ using MuJoCo's internal RNG, which has its own independent state.

+ """

+

+ def __init__(self, random=None):

+ """Initializes a new continuous control task.

+

+ Args:

+ random: Optional, either a `numpy.random.RandomState` instance, an integer

+ seed for creating a new `RandomState`, or None to select a seed

+ automatically (default).

+ """

+ if not isinstance(random, np.random.RandomState):

+ random = np.random.RandomState(random)

+ self._random = random

+ self._visualize_reward = False

+

+ @property

+ def random(self):

+ """Task-specific `numpy.random.RandomState` instance."""

+ return self._random

+

+ def action_spec(self, physics):

+ """Returns a `BoundedArraySpec` matching the `physics` actuators."""

+ return mujoco.action_spec(physics)

+

+ def initialize_episode(self, physics):

+ """Resets geom colors to their defaults after starting a new episode.

+

+ Subclasses of `base.Task` must delegate to this method after performing

+ their own initialization.

+

+ Args:

+ physics: An instance of `mujoco.Physics`.

+ """

+ self.after_step(physics)

+

+ def before_step(self, action, physics):

+ """Sets the control signal for the actuators to values in `action`."""

+ # Support legacy internal code.

+ action = getattr(action, "continuous_actions", action)

+ physics.set_control(action)

+

+ def after_step(self, physics):

+ """Modifies colors according to the reward."""

+ if self._visualize_reward:

+ reward = np.clip(self.get_reward(physics), 0.0, 1.0)

+ _set_reward_colors(physics, reward)

+

+ @property

+ def visualize_reward(self):

+ return self._visualize_reward

+

+ @visualize_reward.setter

+ def visualize_reward(self, value):

+ if not isinstance(value, bool):

+ raise ValueError("Expected a boolean, got {}.".format(type(value)))

+ self._visualize_reward = value

+

+

+_MATERIALS = ["self", "effector", "target"]

+_DEFAULT = [name + "_default" for name in _MATERIALS]

+_HIGHLIGHT = [name + "_highlight" for name in _MATERIALS]

+

+

+def _set_reward_colors(physics, reward):

+ """Sets the highlight, effector and target colors according to the reward."""

+ assert 0.0 <= reward <= 1.0

+ colors = physics.named.model.mat_rgba

+ default = colors[_DEFAULT]

+ highlight = colors[_HIGHLIGHT]

+ blend_coef = reward ** 4 # Better color distinction near high rewards.

+ colors[_MATERIALS] = blend_coef * highlight + (1.0 - blend_coef) * default
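`_set_reward_colors` blends each material's default and highlight RGBA values with coefficient `reward ** 4`, so a material keeps its default color until the reward gets close to 1. The pure-Python sketch below shows that per-channel blend for a single material. The RGBA values are made up for illustration; the real ones come from `physics.named.model.mat_rgba`.

```python
def blend_reward_color(default_rgba, highlight_rgba, reward):
    """Per-channel blend that _set_reward_colors applies (vectorized) per material."""
    if not 0.0 <= reward <= 1.0:
        raise ValueError("reward must lie in [0, 1], got {}".format(reward))
    blend_coef = reward ** 4  # stays small until reward is near 1
    return tuple(blend_coef * h + (1.0 - blend_coef) * d
                 for d, h in zip(default_rgba, highlight_rgba))


# Hypothetical default/highlight colors for one material (illustration only).
DEFAULT_RGBA = (0.7, 0.5, 0.3, 1.0)
HIGHLIGHT_RGBA = (0.3, 0.5, 0.7, 1.0)

for reward in (0.0, 0.5, 0.9, 1.0):
    print(reward, blend_reward_color(DEFAULT_RGBA, HIGHLIGHT_RGBA, reward))
```

At a reward of 0.5 the coefficient is only 0.0625. At 0.9 it is already about 0.66. This is the "better color distinction near high rewards" that the inline comment describes.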

diff --git a/local_dm_control_suite/cartpole.py b/local_dm_control_suite/cartpole.py

new file mode 100755

index 0000000..b8fec14

--- /dev/null

+++ b/local_dm_control_suite/cartpole.py

@@ -0,0 +1,230 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Cartpole domain."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import collections

+

+from dm_control import mujoco

+from dm_control.rl import control

+from local_dm_control_suite import base

+from local_dm_control_suite import common

+from dm_control.utils import containers

+from dm_control.utils import rewards

+from lxml import etree

+import numpy as np

+from six.moves import range

+

+

+_DEFAULT_TIME_LIMIT = 10

+SUITE = containers.TaggedTasks()

+

+

+def get_model_and_assets(num_poles=1):

+ """Returns a tuple containing the model XML string and a dict of assets."""

+ return _make_model(num_poles), common.ASSETS

+

+

+@SUITE.add('benchmarking')

+def balance(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the Cartpole Balance task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Balance(swing_up=False, sparse=False, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+@SUITE.add('benchmarking')

+def balance_sparse(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the sparse reward variant of the Cartpole Balance task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Balance(swing_up=False, sparse=True, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+@SUITE.add('benchmarking')

+def swingup(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the Cartpole Swing-Up task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Balance(swing_up=True, sparse=False, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+@SUITE.add('benchmarking')

+def swingup_sparse(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the sparse reward variant of the Cartpole Swing-Up task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Balance(swing_up=True, sparse=True, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+@SUITE.add()

+def two_poles(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the Cartpole Balance task with two poles."""

+ physics = Physics.from_xml_string(*get_model_and_assets(num_poles=2))

+ task = Balance(swing_up=True, sparse=False, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+@SUITE.add()

+def three_poles(time_limit=_DEFAULT_TIME_LIMIT, random=None, num_poles=3,

+ sparse=False, environment_kwargs=None):

+ """Returns the Cartpole Balance task with three or more poles."""

+ physics = Physics.from_xml_string(*get_model_and_assets(num_poles=num_poles))

+ task = Balance(swing_up=True, sparse=sparse, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, **environment_kwargs)

+

+

+def _make_model(n_poles):

+ """Generates an xml string defining a cart with `n_poles` bodies."""

+ xml_string = common.read_model('cartpole.xml')

+ if n_poles == 1:

+ return xml_string

+ mjcf = etree.fromstring(xml_string)

+ parent = mjcf.find('./worldbody/body/body') # Find first pole.

+ # Make chain of poles.

+ for pole_index in range(2, n_poles+1):

+ child = etree.Element('body', name='pole_{}'.format(pole_index),

+ pos='0 0 1', childclass='pole')

+ etree.SubElement(child, 'joint', name='hinge_{}'.format(pole_index))

+ etree.SubElement(child, 'geom', name='pole_{}'.format(pole_index))

+ parent.append(child)

+ parent = child

+ # Move plane down.

+ floor = mjcf.find('./worldbody/geom')

+ floor.set('pos', '0 0 {}'.format(1 - n_poles - .05))

+ # Move cameras back.

+ cameras = mjcf.findall('./worldbody/camera')

+ cameras[0].set('pos', '0 {} 1'.format(-1 - 2*n_poles))

+ cameras[1].set('pos', '0 {} 2'.format(-2*n_poles))

+ return etree.tostring(mjcf, pretty_print=True)

+

+

+class Physics(mujoco.Physics):

+ """Physics simulation with additional features for the Cartpole domain."""

+

+ def cart_position(self):

+ """Returns the position of the cart."""

+ return self.named.data.qpos['slider'][0]

+

+ def angular_vel(self):

+ """Returns the angular velocity of the pole."""

+ return self.data.qvel[1:]

+

+ def pole_angle_cosine(self):

+ """Returns the cosine of the pole angle."""

+ return self.named.data.xmat[2:, 'zz']

+

+ def bounded_position(self):

+ """Returns the state, with pole angle split into sin/cos."""

+ return np.hstack((self.cart_position(),

+ self.named.data.xmat[2:, ['zz', 'xz']].ravel()))

+

+

+class Balance(base.Task):

+ """A Cartpole `Task` to balance the pole.

+

+ State is initialized either close to the target configuration or at a random

+ configuration.

+ """

+ _CART_RANGE = (-.25, .25)

+ _ANGLE_COSINE_RANGE = (.995, 1)

+

+ def __init__(self, swing_up, sparse, random=None):

+ """Initializes an instance of `Balance`.

+

+ Args:

+ swing_up: A `bool`, which if `True` sets the cart to the middle of the

+ slider and the pole pointing towards the ground. Otherwise, sets the

+ cart to a random position on the slider and the pole to a random

+ near-vertical position.

+ sparse: A `bool`, whether to return a sparse or a smooth reward.

+ random: Optional, either a `numpy.random.RandomState` instance, an

+ integer seed for creating a new `RandomState`, or None to select a seed

+ automatically (default).

+ """

+ self._sparse = sparse

+ self._swing_up = swing_up

+ super(Balance, self).__init__(random=random)

+

+ def initialize_episode(self, physics):

+ """Sets the state of the environment at the start of each episode.

+

+ Initializes the cart and pole according to `swing_up`, and in both cases

+ adds a small random initial velocity to break symmetry.

+

+ Args:

+ physics: An instance of `Physics`.

+ """

+ nv = physics.model.nv

+ if self._swing_up:

+ physics.named.data.qpos['slider'] = .01*self.random.randn()

+ physics.named.data.qpos['hinge_1'] = np.pi + .01*self.random.randn()

+ physics.named.data.qpos[2:] = .1*self.random.randn(nv - 2)

+ else:

+ physics.named.data.qpos['slider'] = self.random.uniform(-.1, .1)

+ physics.named.data.qpos[1:] = self.random.uniform(-.034, .034, nv - 1)

+ physics.named.data.qvel[:] = 0.01 * self.random.randn(physics.model.nv)

+ super(Balance, self).initialize_episode(physics)

+

+ def get_observation(self, physics):

+ """Returns an observation of the (bounded) physics state."""

+ obs = collections.OrderedDict()

+ obs['position'] = physics.bounded_position()

+ obs['velocity'] = physics.velocity()

+ return obs

+

+ def _get_reward(self, physics, sparse):

+ if sparse:

+ cart_in_bounds = rewards.tolerance(physics.cart_position(),

+ self._CART_RANGE)

+ angle_in_bounds = rewards.tolerance(physics.pole_angle_cosine(),

+ self._ANGLE_COSINE_RANGE).prod()

+ return cart_in_bounds * angle_in_bounds

+ else:

+ upright = (physics.pole_angle_cosine() + 1) / 2

+ centered = rewards.tolerance(physics.cart_position(), margin=2)

+ centered = (1 + centered) / 2

+ small_control = rewards.tolerance(physics.control(), margin=1,

+ value_at_margin=0,

+ sigmoid='quadratic')[0]

+ small_control = (4 + small_control) / 5

+ small_velocity = rewards.tolerance(physics.angular_vel(), margin=5).min()

+ small_velocity = (1 + small_velocity) / 2

+ return upright.mean() * small_control * small_velocity * centered

+

+ def get_reward(self, physics):

+ """Returns a sparse or a smooth reward, as specified in the constructor."""

+ return self._get_reward(physics, sparse=self._sparse)
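`_make_model` builds the pole chain by nesting each new `pole_k` body inside the previous one (offset `pos='0 0 1'`). It then lowers the floor and moves both cameras back in proportion to `n_poles`. The sketch below reproduces that logic with the standard-library `xml.etree.ElementTree` instead of `lxml`, so it runs without dm_control installed. `TOY_XML` is a hypothetical, heavily stripped-down stand-in for `cartpole.xml`, whose contents do not appear in this diff. It only mirrors the layout the code assumes: the first pole lives at `./worldbody/body/body`, the floor is the first `worldbody` geom, and at least two cameras exist.

```python
import xml.etree.ElementTree as ET

# Hypothetical minimal stand-in for cartpole.xml (the real file defines much more).
TOY_XML = """<mujoco>
  <worldbody>
    <geom name="floor" pos="0 0 -.05"/>
    <camera name="fixed" pos="0 -4 1"/>
    <camera name="lookatcart" pos="0 -2 2"/>
    <body name="cart">
      <joint name="slider"/>
      <body name="pole_1" childclass="pole">
        <joint name="hinge_1"/>
        <geom name="pole_1"/>
      </body>
    </body>
  </worldbody>
</mujoco>"""


def make_pole_chain(xml_string, n_poles):
    """Same steps as _make_model, using ElementTree rather than lxml."""
    if n_poles == 1:
        return xml_string
    mjcf = ET.fromstring(xml_string)
    parent = mjcf.find('./worldbody/body/body')  # first pole
    for pole_index in range(2, n_poles + 1):
        child = ET.Element('body', name='pole_{}'.format(pole_index),
                           pos='0 0 1', childclass='pole')
        ET.SubElement(child, 'joint', name='hinge_{}'.format(pole_index))
        ET.SubElement(child, 'geom', name='pole_{}'.format(pole_index))
        parent.append(child)  # nest inside the previous pole
        parent = child
    # Lower the floor so the longer chain can hang down without clipping it.
    mjcf.find('./worldbody/geom').set('pos', '0 0 {}'.format(1 - n_poles - .05))
    # Move both cameras back so the whole chain stays in view.
    cameras = mjcf.findall('./worldbody/camera')
    cameras[0].set('pos', '0 {} 1'.format(-1 - 2 * n_poles))
    cameras[1].set('pos', '0 {} 2'.format(-2 * n_poles))
    # ElementTree can return str directly; lxml's etree.tostring returns bytes.
    return ET.tostring(mjcf, encoding='unicode')


print(make_pole_chain(TOY_XML, 3))
```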

diff --git a/local_dm_control_suite/cartpole.xml b/local_dm_control_suite/cartpole.xml

new file mode 100755

index 0000000..e01869d

--- /dev/null

+++ b/local_dm_control_suite/cartpole.xml

@@ -0,0 +1,37 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/local_dm_control_suite/cheetah.py b/local_dm_control_suite/cheetah.py

new file mode 100755

index 0000000..7dd2a63

--- /dev/null

+++ b/local_dm_control_suite/cheetah.py

@@ -0,0 +1,97 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Cheetah Domain."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import collections

+

+from dm_control import mujoco

+from dm_control.rl import control

+from local_dm_control_suite import base

+from local_dm_control_suite import common

+from dm_control.utils import containers

+from dm_control.utils import rewards

+

+

+# How long the simulation will run, in seconds.

+_DEFAULT_TIME_LIMIT = 10

+

+# Running speed above which reward is 1.

+_RUN_SPEED = 10

+

+SUITE = containers.TaggedTasks()

+

+

+def get_model_and_assets():

+ """Returns a tuple containing the model XML string and a dict of assets."""

+ return common.read_model('cheetah.xml'), common.ASSETS

+

+

+@SUITE.add('benchmarking')

+def run(time_limit=_DEFAULT_TIME_LIMIT, random=None, environment_kwargs=None):

+ """Returns the run task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Cheetah(random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(physics, task, time_limit=time_limit,

+ **environment_kwargs)

+

+

+class Physics(mujoco.Physics):

+ """Physics simulation with additional features for the Cheetah domain."""

+

+ def speed(self):

+ """Returns the horizontal speed of the Cheetah."""

+ return self.named.data.sensordata['torso_subtreelinvel'][0]

+

+

+class Cheetah(base.Task):

+ """A `Task` to train a running Cheetah."""

+

+ def initialize_episode(self, physics):

+ """Sets the state of the environment at the start of each episode."""

+ # The indexing below assumes that all joints have a single DOF.

+ assert physics.model.nq == physics.model.njnt

+ is_limited = physics.model.jnt_limited == 1

+ lower, upper = physics.model.jnt_range[is_limited].T

+ physics.data.qpos[is_limited] = self.random.uniform(lower, upper)

+

+ # Stabilize the model before the actual simulation.

+ for _ in range(200):

+ physics.step()

+

+ physics.data.time = 0

+ self._timeout_progress = 0

+ super(Cheetah, self).initialize_episode(physics)

+

+ def get_observation(self, physics):

+ """Returns an observation of the state, ignoring horizontal position."""

+ obs = collections.OrderedDict()

+ # Ignores horizontal position to maintain translational invariance.

+ obs['position'] = physics.data.qpos[1:].copy()

+ obs['velocity'] = physics.velocity()

+ return obs

+

+ def get_reward(self, physics):

+ """Returns a reward to the agent."""

+ return rewards.tolerance(physics.speed(),

+ bounds=(_RUN_SPEED, float('inf')),

+ margin=_RUN_SPEED,

+ value_at_margin=0,

+ sigmoid='linear')
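With `bounds=(_RUN_SPEED, inf)`, `margin=_RUN_SPEED`, `value_at_margin=0` and `sigmoid='linear'`, the run reward should reduce to `clip(speed / _RUN_SPEED, 0, 1)`. It is zero when the cheetah stands still or moves backwards, rises linearly with speed, and saturates at 1 from `_RUN_SPEED` onward. The sketch below is a scalar reimplementation of only the linear branch of a tolerance function. It reflects my reading of `dm_control.utils.rewards.tolerance` and is not the library code, so cross-check it against the installed version.

```python
RUN_SPEED = 10.0  # mirrors _RUN_SPEED in cheetah.py


def linear_tolerance(x, lower, upper, margin, value_at_margin=0.0):
    """Scalar sketch: 1 inside [lower, upper], linear falloff over `margin` outside."""
    if margin <= 0:
        raise ValueError("margin must be positive")
    if lower <= x <= upper:
        return 1.0
    # Normalized distance outside the bounds, in units of the margin.
    d = (lower - x if x < lower else x - upper) / margin
    scaled = d * (1.0 - value_at_margin)
    return 1.0 - scaled if abs(scaled) < 1.0 else 0.0


def cheetah_run_reward(speed):
    return linear_tolerance(speed, RUN_SPEED, float('inf'), margin=RUN_SPEED)


for speed in (-3.0, 0.0, 2.5, 5.0, 10.0, 15.0):
    print(speed, cheetah_run_reward(speed))
```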

diff --git a/local_dm_control_suite/cheetah.xml b/local_dm_control_suite/cheetah.xml

new file mode 100755

index 0000000..1952b5e

--- /dev/null

+++ b/local_dm_control_suite/cheetah.xml

@@ -0,0 +1,73 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/local_dm_control_suite/common/__init__.py b/local_dm_control_suite/common/__init__.py

new file mode 100755

index 0000000..62eab26

--- /dev/null

+++ b/local_dm_control_suite/common/__init__.py

@@ -0,0 +1,39 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Functions to manage the common assets for domains."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import os

+from dm_control.utils import io as resources

+

+_SUITE_DIR = os.path.dirname(os.path.dirname(__file__))

+_FILENAMES = [

+ "./common/materials.xml",

+ "./common/materials_white_floor.xml",

+ "./common/skybox.xml",

+ "./common/visual.xml",

+]

+

+ASSETS = {filename: resources.GetResource(os.path.join(_SUITE_DIR, filename))

+ for filename in _FILENAMES}

+

+

+def read_model(model_filename):

+ """Reads a model XML file and returns its contents as a string."""

+ return resources.GetResource(os.path.join(_SUITE_DIR, model_filename))
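`ASSETS` maps each relative filename in `_FILENAMES` to that file's contents, read from a path relative to the suite directory. The keys are kept exactly as written (e.g. `"./common/skybox.xml"`), presumably so that they match the `<include file=...>` paths inside the model XML files. The sketch below builds the same kind of mapping with only the standard library. It reads raw bytes and uses a hypothetical `load_assets` helper in place of `resources.GetResource`. The demo uses a throwaway directory standing in for `_SUITE_DIR`.

```python
import os
import tempfile


def load_assets(suite_dir, filenames):
    """Map each relative filename (kept verbatim as the key) to its raw bytes."""
    assets = {}
    for filename in filenames:
        with open(os.path.join(suite_dir, filename), 'rb') as f:
            assets[filename] = f.read()
    return assets


# Demo: a temporary directory plays the role of the suite directory.
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, 'common'))
    with open(os.path.join(tmp, 'common', 'skybox.xml'), 'wb') as f:
        f.write(b'<mujoco/>')
    demo_assets = load_assets(tmp, ['./common/skybox.xml'])

print(sorted(demo_assets))
```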

diff --git a/local_dm_control_suite/common/materials.xml b/local_dm_control_suite/common/materials.xml

new file mode 100755

index 0000000..5a3b169

--- /dev/null

+++ b/local_dm_control_suite/common/materials.xml

@@ -0,0 +1,23 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/local_dm_control_suite/common/materials_white_floor.xml b/local_dm_control_suite/common/materials_white_floor.xml

new file mode 100755

index 0000000..a1e35c2

--- /dev/null

+++ b/local_dm_control_suite/common/materials_white_floor.xml

@@ -0,0 +1,23 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/local_dm_control_suite/common/skybox.xml b/local_dm_control_suite/common/skybox.xml

new file mode 100755

index 0000000..b888692

--- /dev/null

+++ b/local_dm_control_suite/common/skybox.xml

@@ -0,0 +1,6 @@

+

+

+

+

+

diff --git a/local_dm_control_suite/common/visual.xml b/local_dm_control_suite/common/visual.xml

new file mode 100755

index 0000000..ede15ad

--- /dev/null

+++ b/local_dm_control_suite/common/visual.xml

@@ -0,0 +1,7 @@

+

+

+

+

+

+

+

diff --git a/local_dm_control_suite/demos/mocap_demo.py b/local_dm_control_suite/demos/mocap_demo.py

new file mode 100755

index 0000000..2e2c7ca

--- /dev/null

+++ b/local_dm_control_suite/demos/mocap_demo.py

@@ -0,0 +1,84 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Demonstration of amc parsing for CMU mocap database.

+

+To run the demo, supply a path to a `.amc` file:

+

+ python mocap_demo.py --filename='path/to/mocap.amc'

+

+CMU motion capture clips are available at mocap.cs.cmu.edu

+"""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import time

+# Internal dependencies.

+

+from absl import app

+from absl import flags

+

+from local_dm_control_suite import humanoid_CMU

+from dm_control.suite.utils import parse_amc

+

+import matplotlib.pyplot as plt

+import numpy as np

+

+FLAGS = flags.FLAGS

+flags.DEFINE_string('filename', None, 'amc file to be converted.')

+flags.DEFINE_integer('max_num_frames', 90,

+ 'Maximum number of frames for plotting/playback')

+

+

+def main(unused_argv):

+ env = humanoid_CMU.stand()

+

+ # Parse and convert specified clip.

+ converted = parse_amc.convert(FLAGS.filename,

+ env.physics, env.control_timestep())

+

+ max_frame = min(FLAGS.max_num_frames, converted.qpos.shape[1] - 1)

+

+ width = 480

+ height = 480

+ video = np.zeros((max_frame, height, 2 * width, 3), dtype=np.uint8)

+

+ for i in range(max_frame):

+ p_i = converted.qpos[:, i]

+ with env.physics.reset_context():

+ env.physics.data.qpos[:] = p_i

+ video[i] = np.hstack([env.physics.render(height, width, camera_id=0),

+ env.physics.render(height, width, camera_id=1)])

+

+ tic = time.time()

+ for i in range(max_frame):

+ if i == 0:

+ img = plt.imshow(video[i])

+ else:

+ img.set_data(video[i])

+ toc = time.time()

+ clock_dt = toc - tic

+ tic = time.time()

+ # Real-time playback is not always possible, since clock_dt can exceed .03.

+ plt.pause(max(0.01, 0.03 - clock_dt)) # Need min display time > 0.0.

+ plt.draw()

+ plt.waitforbuttonpress()

+

+

+if __name__ == '__main__':

+ flags.mark_flag_as_required('filename')

+ app.run(main)
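The demo's playback arithmetic has three parts. It caps the number of frames, stacks the two camera views horizontally (hence `2 * width` columns), and pauses roughly 30 ms per frame minus the time spent drawing, but never less than 10 ms. The helpers below isolate that arithmetic so it can be checked without MuJoCo or matplotlib. The function names are hypothetical; the constants and formulas are copied from `mocap_demo.py`.

```python
FRAME_PERIOD = 0.03  # target seconds per frame in mocap_demo.py
MIN_PAUSE = 0.01     # plt.pause needs a strictly positive duration


def num_playback_frames(max_num_frames, num_clip_frames):
    """Mirrors min(FLAGS.max_num_frames, converted.qpos.shape[1] - 1)."""
    return min(max_num_frames, num_clip_frames - 1)


def playback_pause(render_seconds):
    """Aim for FRAME_PERIOD per frame, but never pause for less than MIN_PAUSE."""
    return max(MIN_PAUSE, FRAME_PERIOD - render_seconds)


def video_shape(num_frames, height=480, width=480):
    """Two camera views side by side give 2 * width columns of RGB pixels."""
    return (num_frames, height, 2 * width, 3)


print(num_playback_frames(90, 50), playback_pause(0.005), video_shape(90))
```

When drawing a frame takes longer than `FRAME_PERIOD`, the pause bottoms out at `MIN_PAUSE` and playback falls behind real time, which is what the comment in the playback loop warns about.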

diff --git a/local_dm_control_suite/demos/zeros.amc b/local_dm_control_suite/demos/zeros.amc

new file mode 100755

index 0000000..b4590a4

--- /dev/null

+++ b/local_dm_control_suite/demos/zeros.amc

@@ -0,0 +1,213 @@

+#DUMMY AMC for testing

+:FULLY-SPECIFIED

+:DEGREES

+1

+root 0 0 0 0 0 0

+lowerback 0 0 0

+upperback 0 0 0

+thorax 0 0 0

+lowerneck 0 0 0

+upperneck 0 0 0

+head 0 0 0

+rclavicle 0 0

+rhumerus 0 0 0

+rradius 0

+rwrist 0

+rhand 0 0

+rfingers 0

+rthumb 0 0

+lclavicle 0 0

+lhumerus 0 0 0

+lradius 0

+lwrist 0

+lhand 0 0

+lfingers 0

+lthumb 0 0

+rfemur 0 0 0

+rtibia 0

+rfoot 0 0

+rtoes 0

+lfemur 0 0 0

+ltibia 0

+lfoot 0 0

+ltoes 0

+2

+root 0 0 0 0 0 0

+lowerback 0 0 0

+upperback 0 0 0

+thorax 0 0 0

+lowerneck 0 0 0

+upperneck 0 0 0

+head 0 0 0

+rclavicle 0 0

+rhumerus 0 0 0

+rradius 0

+rwrist 0

+rhand 0 0

+rfingers 0

+rthumb 0 0

+lclavicle 0 0

+lhumerus 0 0 0

+lradius 0

+lwrist 0

+lhand 0 0

+lfingers 0

+lthumb 0 0

+rfemur 0 0 0

+rtibia 0

+rfoot 0 0

+rtoes 0

+lfemur 0 0 0

+ltibia 0

+lfoot 0 0

+ltoes 0

+3

+root 0 0 0 0 0 0

+lowerback 0 0 0

+upperback 0 0 0

+thorax 0 0 0

+lowerneck 0 0 0

+upperneck 0 0 0

+head 0 0 0

+rclavicle 0 0

+rhumerus 0 0 0

+rradius 0

+rwrist 0

+rhand 0 0

+rfingers 0

+rthumb 0 0

+lclavicle 0 0

+lhumerus 0 0 0

+lradius 0

+lwrist 0

+lhand 0 0

+lfingers 0

+lthumb 0 0

+rfemur 0 0 0

+rtibia 0

+rfoot 0 0

+rtoes 0

+lfemur 0 0 0

+ltibia 0

+lfoot 0 0

+ltoes 0

+4

+root 0 0 0 0 0 0

+lowerback 0 0 0

+upperback 0 0 0

+thorax 0 0 0

+lowerneck 0 0 0

+upperneck 0 0 0

+head 0 0 0

+rclavicle 0 0

+rhumerus 0 0 0

+rradius 0

+rwrist 0

+rhand 0 0

+rfingers 0

+rthumb 0 0

+lclavicle 0 0

+lhumerus 0 0 0

+lradius 0

+lwrist 0

+lhand 0 0

+lfingers 0

+lthumb 0 0

+rfemur 0 0 0

+rtibia 0

+rfoot 0 0

+rtoes 0

+lfemur 0 0 0

+ltibia 0

+lfoot 0 0

+ltoes 0

+5

+root 0 0 0 0 0 0

+lowerback 0 0 0

+upperback 0 0 0

+thorax 0 0 0

+lowerneck 0 0 0

+upperneck 0 0 0

+head 0 0 0

+rclavicle 0 0

+rhumerus 0 0 0

+rradius 0

+rwrist 0

+rhand 0 0

+rfingers 0

+rthumb 0 0

+lclavicle 0 0

+lhumerus 0 0 0

+lradius 0

+lwrist 0

+lhand 0 0

+lfingers 0

+lthumb 0 0

+rfemur 0 0 0

+rtibia 0

+rfoot 0 0

+rtoes 0

+lfemur 0 0 0

+ltibia 0

+lfoot 0 0

+ltoes 0

+6

+root 0 0 0 0 0 0

+lowerback 0 0 0

+upperback 0 0 0

+thorax 0 0 0

+lowerneck 0 0 0

+upperneck 0 0 0

+head 0 0 0

+rclavicle 0 0

+rhumerus 0 0 0

+rradius 0

+rwrist 0

+rhand 0 0

+rfingers 0

+rthumb 0 0

+lclavicle 0 0

+lhumerus 0 0 0

+lradius 0

+lwrist 0

+lhand 0 0

+lfingers 0

+lthumb 0 0

+rfemur 0 0 0

+rtibia 0

+rfoot 0 0

+rtoes 0

+lfemur 0 0 0

+ltibia 0

+lfoot 0 0

+ltoes 0

+7

+root 0 0 0 0 0 0

+lowerback 0 0 0

+upperback 0 0 0

+thorax 0 0 0

+lowerneck 0 0 0

+upperneck 0 0 0

+head 0 0 0

+rclavicle 0 0

+rhumerus 0 0 0

+rradius 0

+rwrist 0

+rhand 0 0

+rfingers 0

+rthumb 0 0

+lclavicle 0 0

+lhumerus 0 0 0

+lradius 0

+lwrist 0

+lhand 0 0

+lfingers 0

+lthumb 0 0

+rfemur 0 0 0

+rtibia 0

+rfoot 0 0

+rtoes 0

+lfemur 0 0 0

+ltibia 0

+lfoot 0 0

+ltoes 0
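`zeros.amc` is a fully specified AMC clip. It starts with header keywords (`:FULLY-SPECIFIED`, `:DEGREES`). Each frame then follows as a bare frame index and one line per bone holding that bone's values: six for `root`, and one to three for every other bone. dm_control ships its own `parse_amc`, which `mocap_demo.py` uses. The hypothetical `parse_amc_frames` below is an independent minimal reader for just this layout, which can help when eyeballing a test file like this one.

```python
def parse_amc_frames(text):
    """Return one {bone_name: [float, ...]} dict per frame of a simple AMC file."""
    frames = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith('#') or line.startswith(':'):
            continue  # blank lines, comments and header keywords
        tokens = line.split()
        if len(tokens) == 1 and tokens[0].isdigit():
            frames.append({})  # a bare integer starts a new frame
            continue
        if not frames:
            raise ValueError('bone data found before the first frame index')
        frames[-1][tokens[0]] = [float(v) for v in tokens[1:]]
    return frames


SAMPLE = """#DUMMY AMC for testing
:FULLY-SPECIFIED
:DEGREES
1
root 0 0 0 0 0 0
rradius 0
2
root 0 0 0 0 0 0
rradius 0
"""

frames = parse_amc_frames(SAMPLE)
print(len(frames), frames[0])
```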

diff --git a/local_dm_control_suite/explore.py b/local_dm_control_suite/explore.py

new file mode 100755

index 0000000..06fb0a8

--- /dev/null

+++ b/local_dm_control_suite/explore.py

@@ -0,0 +1,84 @@

+# Copyright 2018 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+"""Control suite environments explorer."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+from absl import app

+from absl import flags

+from dm_control import suite

+from dm_control.suite.wrappers import action_noise

+from six.moves import input

+

+from dm_control import viewer

+

+

+_ALL_NAMES = ['.'.join(domain_task) for domain_task in suite.ALL_TASKS]

+

+flags.DEFINE_enum('environment_name', None, _ALL_NAMES,

+ 'Optional \'domain_name.task_name\' pair specifying the '

+ 'environment to load. If unspecified a prompt will appear to '

+ 'select one.')

+flags.DEFINE_bool('timeout', True, 'Whether episodes should have a time limit.')

+flags.DEFINE_bool('visualize_reward', True,

+ 'Whether to vary the colors of geoms according to the '

+ 'current reward value.')

+flags.DEFINE_float('action_noise', 0.,

+ 'Standard deviation of Gaussian noise to apply to actions, '

+ 'expressed as a fraction of the max-min range for each '

+ 'action dimension. Defaults to 0, i.e. no noise.')

+FLAGS = flags.FLAGS

+

+

+def prompt_environment_name(prompt, values):

+ environment_name = None

+ while not environment_name:

+ environment_name = input(prompt)

+ if not environment_name or environment_name not in values:

+ print('"%s" is not a valid environment name.' % environment_name)

+ environment_name = None

+ return environment_name

+

+

+def main(argv):

+ del argv

+ environment_name = FLAGS.environment_name

+ if environment_name is None:

+ print('\n '.join(['Available environments:'] + _ALL_NAMES))

+ environment_name = prompt_environment_name(

+ 'Please select an environment name: ', _ALL_NAMES)

+

+ index = _ALL_NAMES.index(environment_name)

+ domain_name, task_name = suite.ALL_TASKS[index]

+

+ task_kwargs = {}

+ if not FLAGS.timeout:

+ task_kwargs['time_limit'] = float('inf')

+

+ def loader():

+ env = suite.load(

+ domain_name=domain_name, task_name=task_name, task_kwargs=task_kwargs)

+ env.task.visualize_reward = FLAGS.visualize_reward

+ if FLAGS.action_noise > 0:

+ env = action_noise.Wrapper(env, scale=FLAGS.action_noise)

+ return env

+

+ viewer.launch(loader)

+

+

+if __name__ == '__main__':

+ app.run(main)
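`list.index` raises `ValueError` for a missing item instead of returning `-1`, so a membership test (`in`) is the safe way to validate the typed name. The sketch below shows the prompt loop written that way, plus the `'domain.task'` to `(domain_name, task_name)` lookup that `main` performs. The injectable `read` argument and the two-entry task list are assumptions added for illustration, so the loop can run without a terminal or dm_control.

```python
def prompt_environment_name(prompt, values, read=input):
    """Re-prompt until the reply is one of `values` (`read` is hypothetical, for testing)."""
    while True:
        name = read(prompt).strip()
        if name in values:  # membership test; list.index would raise instead
            return name
        print('"%s" is not a valid environment name.' % name)


def split_environment_name(environment_name, all_tasks):
    """Map 'domain.task' back to its (domain_name, task_name) tuple."""
    names = ['.'.join(domain_task) for domain_task in all_tasks]
    return all_tasks[names.index(environment_name)]


ALL_TASKS = [('cartpole', 'swingup'), ('cheetah', 'run')]  # illustrative subset
replies = iter(['', 'cartpole.typo', 'cheetah.run'])
chosen = prompt_environment_name('> ', ['.'.join(t) for t in ALL_TASKS],
                                 read=lambda _prompt: next(replies))
print(chosen, split_environment_name(chosen, ALL_TASKS))
```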

diff --git a/local_dm_control_suite/finger.py b/local_dm_control_suite/finger.py

new file mode 100755

index 0000000..e700db6

--- /dev/null

+++ b/local_dm_control_suite/finger.py

@@ -0,0 +1,217 @@

+# Copyright 2017 The dm_control Authors.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# ============================================================================

+

+"""Finger Domain."""

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import collections

+

+from dm_control import mujoco

+from dm_control.rl import control

+from local_dm_control_suite import base

+from local_dm_control_suite import common

+from dm_control.suite.utils import randomizers

+from dm_control.utils import containers

+import numpy as np

+from six.moves import range

+

+_DEFAULT_TIME_LIMIT = 20 # (seconds)

+_CONTROL_TIMESTEP = .02 # (seconds)

+# For TURN tasks, the 'tip' geom needs to enter a spherical target of sizes:

+_EASY_TARGET_SIZE = 0.07

+_HARD_TARGET_SIZE = 0.03

+# Initial spin velocity for the Stop task.

+_INITIAL_SPIN_VELOCITY = 100

+# Spinning slower than this value (radian/second) is considered stopped.

+_STOP_VELOCITY = 1e-6

+# Spinning faster than this value (radian/second) is considered spinning.

+_SPIN_VELOCITY = 15.0

+

+

+SUITE = containers.TaggedTasks()

+

+

+def get_model_and_assets():

+ """Returns a tuple containing the model XML string and a dict of assets."""

+ return common.read_model('finger.xml'), common.ASSETS

+

+

+@SUITE.add('benchmarking')

+def spin(time_limit=_DEFAULT_TIME_LIMIT, random=None, environment_kwargs=None):

+ """Returns the Spin task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Spin(random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, control_timestep=_CONTROL_TIMESTEP,

+ **environment_kwargs)

+

+

+@SUITE.add('benchmarking')

+def turn_easy(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the easy Turn task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Turn(target_radius=_EASY_TARGET_SIZE, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, control_timestep=_CONTROL_TIMESTEP,

+ **environment_kwargs)

+

+

+@SUITE.add('benchmarking')

+def turn_hard(time_limit=_DEFAULT_TIME_LIMIT, random=None,

+ environment_kwargs=None):

+ """Returns the hard Turn task."""

+ physics = Physics.from_xml_string(*get_model_and_assets())

+ task = Turn(target_radius=_HARD_TARGET_SIZE, random=random)

+ environment_kwargs = environment_kwargs or {}

+ return control.Environment(

+ physics, task, time_limit=time_limit, control_timestep=_CONTROL_TIMESTEP,

+ **environment_kwargs)

+

+

+class Physics(mujoco.Physics):
+  """Physics simulation with additional features for the Finger domain."""
+
+  def touch(self):
+    """Returns logarithmically scaled signals from the two touch sensors."""
+    return np.log1p(self.named.data.sensordata[['touchtop', 'touchbottom']])
+
+  def hinge_velocity(self):
+    """Returns the velocity of the hinge joint."""
+    return self.named.data.sensordata['hinge_velocity']
+
+  def tip_position(self):
+    """Returns the (x,z) position of the tip relative to the hinge."""
+    return (self.named.data.sensordata['tip'][[0, 2]] -
+            self.named.data.sensordata['spinner'][[0, 2]])
+
+  def bounded_position(self):
+    """Returns the positions, with the hinge angle replaced by tip position."""
+    return np.hstack((self.named.data.sensordata[['proximal', 'distal']],
+                      self.tip_position()))
+
+  def velocity(self):
+    """Returns the velocities (extracted from sensordata)."""
+    return self.named.data.sensordata[['proximal_velocity',
+                                       'distal_velocity',
+                                       'hinge_velocity']]
+
+  def target_position(self):
+    """Returns the (x,z) position of the target relative to the hinge."""
+    return (self.named.data.sensordata['target'][[0, 2]] -
+            self.named.data.sensordata['spinner'][[0, 2]])
+
+  def to_target(self):
+    """Returns the vector from the tip to the target."""
+    return self.target_position() - self.tip_position()
+
+  def dist_to_target(self):
+    """Returns the signed distance to the target surface, negative is inside."""
+    return (np.linalg.norm(self.to_target()) -
+            self.named.model.site_size['target', 0])
+
+
+class Spin(base.Task):
+  """A Finger `Task` to spin the stopped body."""
+
+  def __init__(self, random=None):
+    """Initializes a new `Spin` instance.
+
+    Args:
+      random: Optional, either a `numpy.random.RandomState` instance, an
+        integer seed for creating a new `RandomState`, or None to select a seed
+        automatically (default).
+    """
+    super(Spin, self).__init__(random=random)
+
+  def initialize_episode(self, physics):
+    physics.named.model.site_rgba['target', 3] = 0
+    physics.named.model.site_rgba['tip', 3] = 0
+    physics.named.model.dof_damping['hinge'] = .03
+    _set_random_joint_angles(physics, self.random)
+    super(Spin, self).initialize_episode(physics)
+
+  def get_observation(self, physics):
+    """Returns state and touch sensors."""
+    obs = collections.OrderedDict()
+    obs['position'] = physics.bounded_position()
+    obs['velocity'] = physics.velocity()
+    obs['touch'] = physics.touch()
+    return obs
+
+  def get_reward(self, physics):
+    """Returns 1 if the hinge velocity is <= -_SPIN_VELOCITY, else 0."""
+    return float(physics.hinge_velocity() <= -_SPIN_VELOCITY)
+
+
+class Turn(base.Task):
+  """A Finger `Task` to turn the body to a target angle."""
+
+  def __init__(self, target_radius, random=None):
+    """Initializes a new `Turn` instance.
+
+    Args:
+      target_radius: Radius of the target site, which specifies the goal angle.
+      random: Optional, either a `numpy.random.RandomState` instance, an
+        integer seed for creating a new `RandomState`, or None to select a seed
+        automatically (default).
+    """
+    self._target_radius = target_radius
+    super(Turn, self).__init__(random=random)
+
+  def initialize_episode(self, physics):
+    target_angle = self.random.uniform(-np.pi, np.pi)
+    hinge_x, hinge_z = physics.named.data.xanchor['hinge', ['x', 'z']]
+    radius = physics.named.model.geom_size['cap1'].sum()
+    target_x = hinge_x + radius * np.sin(target_angle)
+    target_z = hinge_z + radius * np.cos(target_angle)
+    physics.named.model.site_pos['target', ['x', 'z']] = target_x, target_z
+    physics.named.model.site_size['target', 0] = self._target_radius
+
+    _set_random_joint_angles(physics, self.random)
+
+    super(Turn, self).initialize_episode(physics)
+
+  def get_observation(self, physics):
+    """Returns state, touch sensors, and target info."""
+    obs = collections.OrderedDict()
+    obs['position'] = physics.bounded_position()
+    obs['velocity'] = physics.velocity()
+    obs['touch'] = physics.touch()
+    obs['target_position'] = physics.target_position()
+    obs['dist_to_target'] = physics.dist_to_target()
+    return obs
+
+  def get_reward(self, physics):
+    return float(physics.dist_to_target() <= 0)
+
+
+def _set_random_joint_angles(physics, random, max_attempts=1000):
+  """Sets the joints to a random collision-free state."""
+
+  for _ in range(max_attempts):
+    randomizers.randomize_limited_and_rotational_joints(physics, random)
+    # Check for collisions.
+    physics.after_reset()
+    if physics.data.ncon == 0:
+      break
+  else:
+    raise RuntimeError('Could not find a collision-free state '
+                       'after {} attempts'.format(max_attempts))
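The geometry and reward logic of the Finger tasks can be checked without MuJoCo. The following is a minimal plain-Python sketch, not part of dm_control or dmc2gym. Every function name in it is hypothetical, and it assumes a 2-D (x, z) plane with scalar inputs.

- `place_target` follows the sin/cos placement in `Turn.initialize_episode`, with `radius` standing in for `geom_size['cap1'].sum()`.
- `dist_to_target` and `turn_reward` follow `Physics.dist_to_target` and `Turn.get_reward`.
- `spin_reward` follows `Spin.get_reward`, with the threshold passed in instead of `_SPIN_VELOCITY`.
- `sample_until_valid` reproduces the `for`/`else` retry loop of `_set_random_joint_angles`. A caller-supplied `is_valid` predicate replaces the `physics.data.ncon == 0` contact check.

```python
import math
import random


def place_target(hinge_x, hinge_z, radius, target_angle):
    """Returns the (x, z) target position, as in `Turn.initialize_episode`.

    The angle is measured from the +z axis towards +x, so an angle of 0
    puts the target directly above the hinge.
    """
    target_x = hinge_x + radius * math.sin(target_angle)
    target_z = hinge_z + radius * math.cos(target_angle)
    return target_x, target_z


def dist_to_target(tip, target, target_radius):
    """Signed distance from the tip to the target surface (negative = inside)."""
    return math.hypot(target[0] - tip[0], target[1] - tip[1]) - target_radius


def turn_reward(tip, target, target_radius):
    """Sparse Turn-style reward: 1.0 if the tip is inside or on the target."""
    return float(dist_to_target(tip, target, target_radius) <= 0)


def spin_reward(hinge_velocity, spin_velocity):
    """Sparse Spin-style reward: 1.0 only for fast spinning in the -ve direction."""
    return float(hinge_velocity <= -spin_velocity)


def sample_until_valid(sample, is_valid, max_attempts=1000):
    """Rejection sampling using the for/else pattern of the original helper."""
    for _ in range(max_attempts):
        candidate = sample()
        if is_valid(candidate):
            break
    else:
        # Runs only if the loop finished without hitting `break`.
        raise RuntimeError(
            'Could not find a valid state after {} attempts'.format(max_attempts))
    return candidate


if __name__ == '__main__':
    rng = random.Random(0)
    angle = rng.uniform(-math.pi, math.pi)
    target = place_target(0.0, 0.0, 0.2, angle)
    print(turn_reward(target, target, 0.03))  # tip at the target center -> 1.0
    print(spin_reward(-20.0, 15.0), spin_reward(20.0, 15.0))  # -> 1.0 0.0
```

The numbers in the `__main__` block (a 0.2 radius, a 0.03 target radius, a 15.0 spin threshold) are illustrative only. They are not the values defined in `finger.py`. The `for`/`else` form avoids a separate success flag: the `else` branch runs only when every attempt was rejected.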